A Closer Look at How IRCC’s Officer and Model Rules Advanced Analytics Triage Works

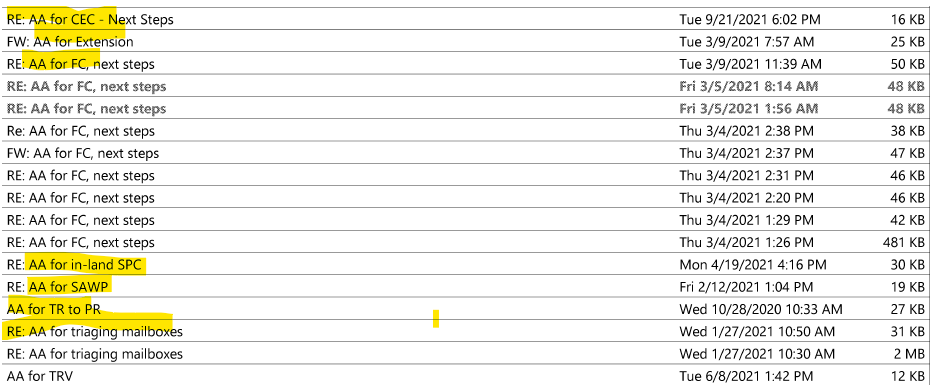

As IRCC ramps up to bring advanced analytics to all of its Lines of Business (LOBs), it is important to take a closer look at the foundational model: the China TRV Application Process. Indeed, we know that this model will become the TRV model for the rest of the world sometime this year (if it has not already).

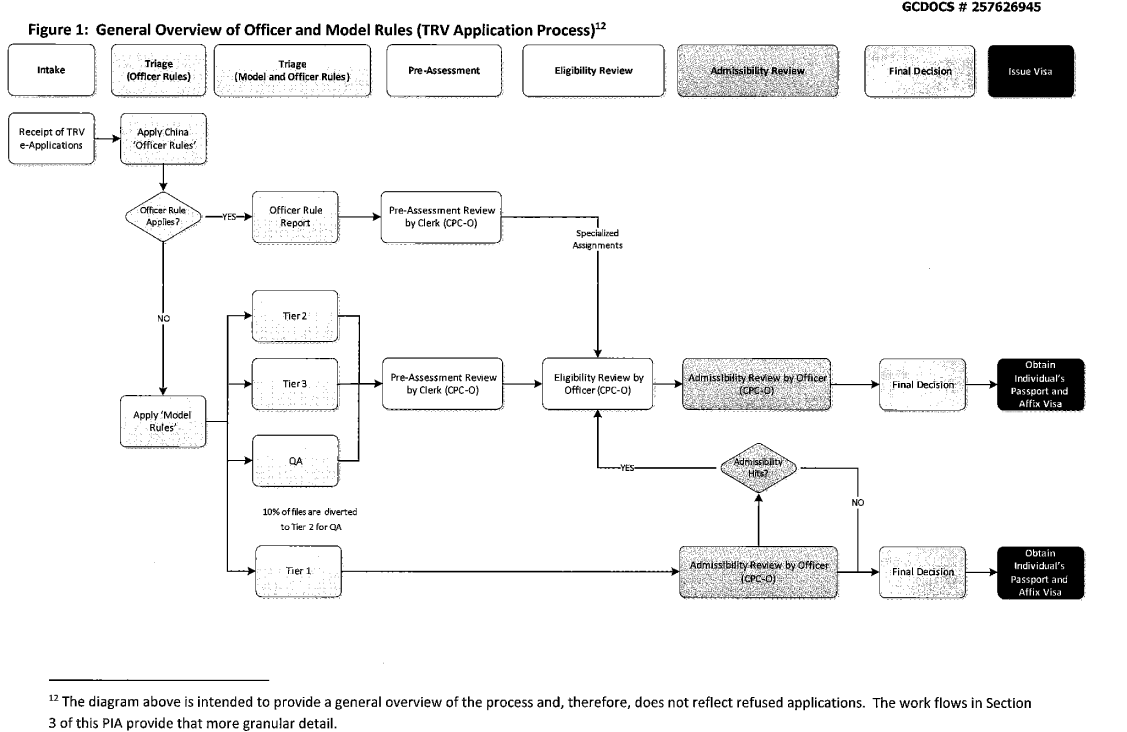

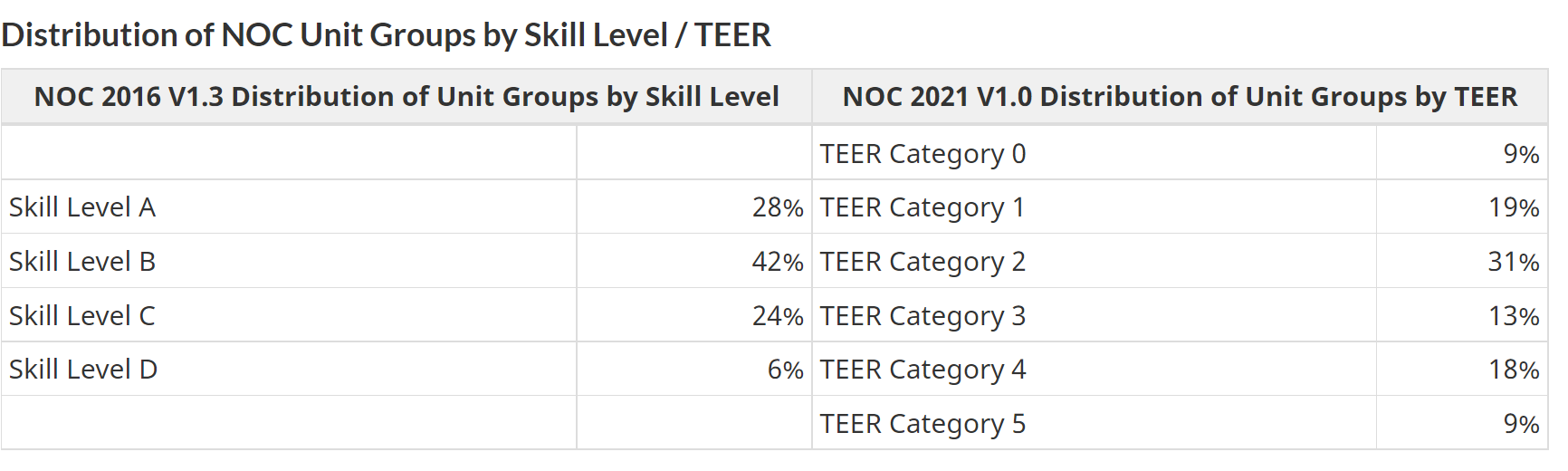

While this chart is from a few years back (which reflects, as I have discussed in many recent presentations and podcasts, how far behind we are in this area), my understanding is that this three-tier system is still the model in place.

Over the next few posts, I’ll try to break down the model in more detail.

This first post will serve as an overview of the process.

I have included a helpful chart explaining how an application goes from intake to decision and passport request.

While I will have blog posts that go into more detail about what ‘Officer Rules’ and ‘Model Rules’ are, here is the basic gist. As a reminder, the chart only represents the process to approval, NOT refusal; a similar chart for refusals was not provided.

Step 1) Officer’s Rules Extract Applications Based on Visa Office-Specific Rules

Each Visa Office has its own Officer’s Rules. If an application triggers one of those rules, it is no longer processed by the Advanced Analytics (AA)/AI model. Think of it as a first filter, likely for complex files that need a closer look by IRCC.
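To make the “first filter” idea concrete, here is a minimal Python sketch, assuming each Officer’s Rule can be modelled as a simple yes/no check on an application. The `Application` fields, the rule table, the `"Beijing"` key and the `"local_word_flag"` value are all hypothetical; IRCC has not disclosed what its Officer’s Rules actually test.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Application:
    # Hypothetical fields for illustration; IRCC's real data model is not public.
    app_id: str
    visa_office: str
    flags: set[str] = field(default_factory=set)


# A hypothetical Officer's Rule: a predicate that returns True if it "fires".
OfficerRule = Callable[[Application], bool]

# Hypothetical office-specific rule table, keyed by visa office.
OFFICER_RULES: dict[str, list[OfficerRule]] = {
    "Beijing": [lambda app: "local_word_flag" in app.flags],
}


def first_filter(app: Application) -> str:
    """Return 'officer_review' if any rule for the file's visa office fires,
    otherwise 'model' (the file continues on to AA/AI triage)."""
    rules = OFFICER_RULES.get(app.visa_office, [])
    if any(rule(app) for rule in rules):
        # Extracted from the model; the visa office's SOPs apply instead.
        return "officer_review"
    return "model"
```

The point of the sketch is ordering: office-specific rules are checked before any model scoring happens, so a file that trips one never reaches the AA/AI tiers.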

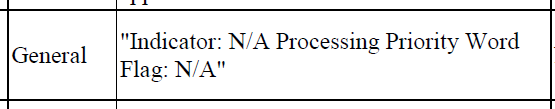

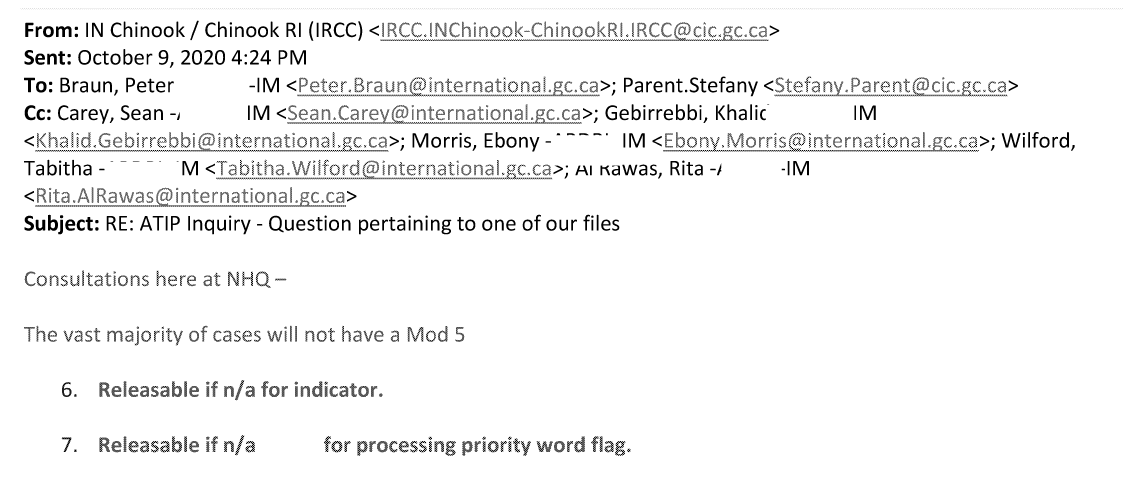

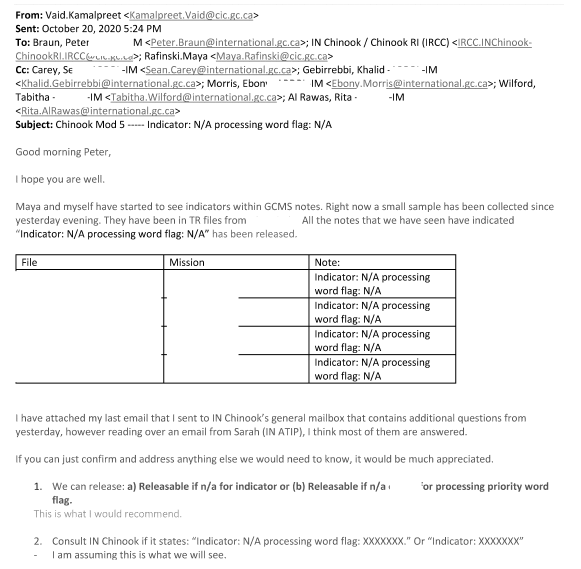

You will recall from our discussion of Chinook the presence of “local word flags” and “risk indicators.” I have no evidence yet linking these two pieces together, but presumably the Officer Rules are also triggered by certain words and flags.

Beyond this, we do not know what the Officer’s Rules are, and we should not expect to. We do know, however, that once a file is extracted, the Standard Operating Procedures (SOPs) at each Visa Office apply rather than the AA/AI model. This suggests that the SOPs (and access to these documents) may hold the triggers for the word flags.

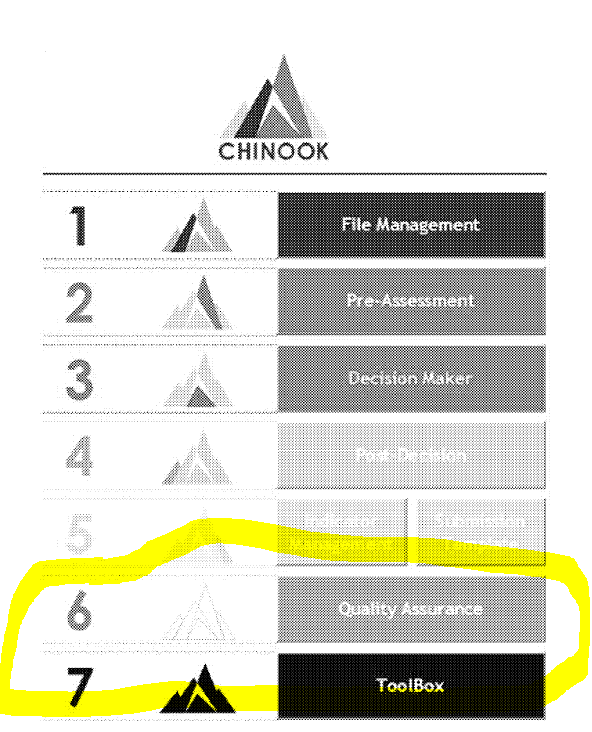

Step 2) Application of Model Rules

This is where the AA/AI kicks in. Model Rules (which I will discuss in a future blog post) are created by IRCC data experts to sort applications into tiers with a high level of confidence. Tier 1 applications are those that, with a high level of confidence, should lead to positive eligibility findings. Indeed, Tier 1 applications are decided with no human in the loop: the computer system approves them. If an application is likely to fail the eligibility assessment and lead to a negative outcome, it goes to Tier 3. Tier 3 requires Officer review and, unsurprisingly, has the highest refusal rate, as we discussed in this previous piece.

Tier 2 consists of the files that fall between positive and negative (the ‘maybe files’), as well as those that fit neither the Model Rules nor the Officer Rules. Officers also review these cases, but approval rates are higher than in Tier 3.
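The tiering logic described above can be sketched as follows. This is a simplification under stated assumptions: it collapses the Model Rules into a single hypothetical score (`p_positive`) with made-up cut-offs, whereas IRCC’s real Model Rules and thresholds are not public.

```python
from typing import Optional

# Hypothetical cut-offs for "high confidence"; IRCC has not published these.
HIGH_CONFIDENCE_POSITIVE = 0.9
HIGH_CONFIDENCE_NEGATIVE = 0.1


def assign_tier(p_positive: Optional[float]) -> int:
    """Map a hypothetical Model Rules score to Tier 1, 2 or 3.

    p_positive is an assumed estimate of the likelihood of a positive
    eligibility finding, or None when the file fits no Model Rule.
    """
    if p_positive is None:
        return 2  # fits neither the Model Rules nor the Officer Rules
    if p_positive >= HIGH_CONFIDENCE_POSITIVE:
        return 1  # approved on eligibility with no human in the loop
    if p_positive <= HIGH_CONFIDENCE_NEGATIVE:
        return 3  # likely negative outcome; Officer review
    return 2  # the "maybe files"; Officer review
```

Note that in this sketch Tier 2 is the catch-all: anything the model cannot place confidently at either end lands with an Officer.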

Step 3) Quality Assurance

In the Quality Assurance portion of this model, 10% of all files are filtered to Tier 2 to verify the model’s accuracy.
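A minimal sketch of the QA step, assuming the 10% is a simple random sample. The function name, the seed, and the `from_tiers` option are hypothetical. The text says 10% of all files; whether IRCC actually draws the sample from every tier or only from Tier 1 is unclear, so the sketch lets you choose.

```python
import random

QA_RATE = 0.10  # the 10% figure described in the chart


def qa_sample(tiers: dict[str, int], rate: float = QA_RATE,
              from_tiers: tuple[int, ...] = (1, 2, 3),
              seed: int = 0) -> dict[str, int]:
    """Return a new mapping of application ID -> tier in which a random
    `rate` share of the eligible files has been routed to Tier 2 for QA."""
    rng = random.Random(seed)  # seeded only so the example is reproducible
    pool = sorted(app_id for app_id, tier in tiers.items() if tier in from_tiers)
    k = round(len(pool) * rate)
    sampled = set(rng.sample(pool, k))
    # Sampled files go to Tier 2 (human review); all others keep their tier.
    return {app_id: 2 if app_id in sampled else tier
            for app_id, tier in tiers.items()}
```

Routing a sample of otherwise automated files to human review is what lets model output be checked against Officer decisions; nothing in the source says how IRCC actually draws its sample.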

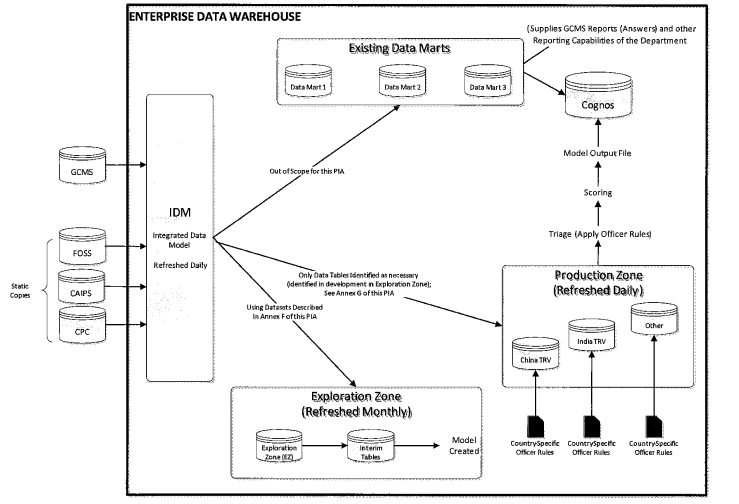

The models themselves become ‘production models’ once a high level of confidence is reached and they are finalized. Examples include the ones we have seen for China TRV and India TRV, and, we believe, China and India Study Permits, and likely also cases such as VESPA (though this last part has not been confirmed). Before a model becomes a production model, it sits in the exploratory model zone.

How do we know the model meets a high QA standard? This is where we look at the scoring of the file.

I will break down this particular model (which frankly needs more research) in a later piece, but in short, applications are scored to ensure the model is working effectively.

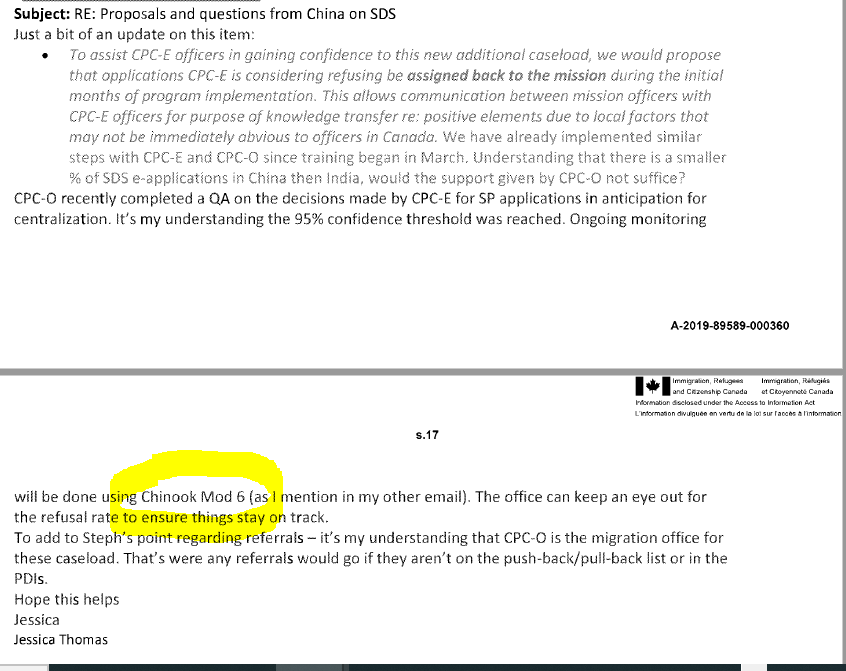

Interestingly, Chinook also has a QA function (an entire QA module, Chinook Module 6), so there appears to be even more overlap between the two systems, probably akin to a front-end/back-end relationship.

Step 4) Pre-Assessment

Tier 1 applications go straight to admissibility review, but those in Tiers 2 and 3 go to pre-assessment review by a clerk.
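As a rough sketch, the routing after triage on the approval path might be expressed like this. The stage names are hypothetical labels for the steps in the chart, not IRCC system names, and the sketch covers approvals only, since no refusal chart was provided.

```python
def next_steps(tier: int) -> list[str]:
    """Return the (hypothetical) sequence of stages a file passes through
    after triage on the approval path, based on the tier it was assigned."""
    if tier == 1:
        # Eligibility already decided by the system; only admissibility remains.
        return ["admissibility_review"]
    if tier in (2, 3):
        # Human stages: clerk pre-assessment, then Officer eligibility review.
        return [
            "clerk_pre_assessment",
            "officer_eligibility_assessment",
            "admissibility_review",
        ]
    raise ValueError(f"unknown tier: {tier}")
```

The asymmetry is the point: Tier 1 files skip both human stages entirely, while Tier 2 and Tier 3 files follow the same path and differ only in how likely they are to be refused.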

It is important to note, here and in the module, that these clerks and officers appear to be sitting at CPC-O (the Case Processing Centre in Ottawa), not at local visa offices abroad. This may also explain why so many more decisions are being made by decision-makers in Canada, even though a decision may ultimately be delivered by, or associated with, a primary visa office abroad.

But herein lies some of our confusion.

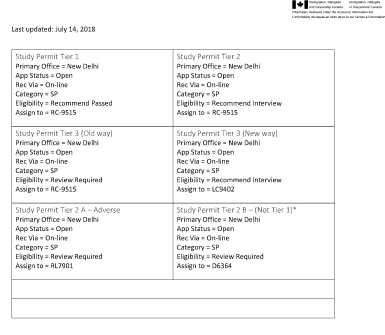

Based on a 2018 ATIP request we made, we know that IRCC was triaging cases by case type into “Bins,” so that certain officers (or at least certain lettered numbers) would handle similar cases. Yet this appears to have been the India model at the time, whereas the China TRV model seems to centralize processing more in Ottawa. Where do local knowledge and expertise come in? Are there now alternative models that send decisions to the local visa office, or does that happen only through the Officer’s Rules? Is this perhaps why TRV decisions from India and China lack the local knowledge we used to see, because they have been taken out of the hands of those individuals?

Much of the local work used to involve verifying employers and confirming certain details, but is that now reserved for files that are taken out of the triage and flagged as possible admissibility concerns? Much to think about here.

Again, note that Chinook has a pre-assessment module that seems to be responsible for many of the same things. Perhaps Chinook presents the results of that analysis in a more officer-friendly way, but then why is it also directing the pre-assessment, if the pre-assessment is being done by officers?

Step 5) Eligibility Assessment

What is important to note is that this Eligibility stage, where there is no automated approval, is still being done by officers. What we do not know is whether officers receive any guidance to approve or refuse a certain number of Tier 2 or Tier 3 applicants. This information would be crucial. We also know IRCC is trying to automate refusals, so we need to track carefully what that might look like down the road as it intersects with negative eligibility assessments.

Step 6) Admissibility Review + Admissibility Hits

While this will likely be the last portion to be automated, given the need to cross-verify many different sources, we also know that IRCC has programs in place such as Watchtower and, again, the Risk Flags, which may or may not trigger admissibility review. Interestingly, even in cases where admissibility (misrepresentation) seems to be at play, the result also appears to be eligibility refusals or concerns. I would be interested in knowing whether the flagging system also operates at the eligibility level, or whether there is a feedback/pushback system so that a decision can be re-routed to eligibility (on an A16 IRPA issue, for example).

KEY: Refusals Not Reflected in Chart

What does the refusal system look like? This becomes another key question, as decisions often skip even biometrics or verifications and go straight to refusal. Such a chart would likely look much more complicated, with many more steps at which a refusal can be rendered without completing the full eligibility assessment.

Is there a similar map? Can we get access to it?

Conclusion: we know nothing yet, but this also changes everything

This model, and the idea of an application being pulled off the assembly line at various points and routed through different systems of assessment, suggests to me that we as applicants’ counsel know very little about how our applications will be processed in the future. These systems do not support cookie-cutter lawyering, suggest that flags may be outside our control and knowledge, and ultimately lead us to question what (and who) makes up a perfect Tier 1 application.

Models like this also give credence to IRCC’s determination to keep things private and to keep the algorithms and code away from prying investigators and researchers, and ultimately from those who may want to take advantage of the systems.

Yet the lack of transparency, and the concerns we have about how these systems filter and sort, appear well founded. Chinook mirrors much of what is in the AA model. We have our homework cut out for us.