As a public service, and transparently because I also need to refer to these in my own work in the area, I am sharing three peer reviews that have not yet been published by Immigration, Refugees and Citizenship Canada (“IRCC”) nor made available on the published Algorithmic Impact Assessment (“AIA”) pages from the Treasury Board Secretariat (“TBS”).

First, a recap. Following the third review of the Directive on Automated Decision-Making (“DADM”), and feedback from stakeholders, TBS proposed amending the peer review section to require that a peer review be completed and published prior to the system’s production.

The previous iteration of the DADM did not require publication, nor did it specify the timeframe for the peer review. The motivation for the amendment was to increase public trust around Automated Decision-Making Systems (“ADM”). As stated in the proposed amendment summary at page 15:

The absence of a mechanism mandating the release of peer reviews (or related information) creates a missed opportunity for bolstering public trust in the use of automated decision systems through an externally sourced expert assessment. Releasing at least a summary of completed peer reviews (given the challenges of exposing sensitive program data, trade secrets, or information about proprietary systems) can strengthen transparency and accountability by enabling stakeholders to validate the information in AIAs. The current requirement is also silent on the timing of peer reviews, creating uncertainty for both departments and reviewers as to whether to complete a review prior to or during system deployment. Unlike audits, reviews are most effective when made available alongside an AIA, prior to the production of a system, so that they can serve their function as an additional layer of assurance. The proposed amendments address these issues by expanding the requirement to mandate publication and specify a timing for reviews. Published peer reviews (or summaries of reviews) would complement documentation on the results of audits or other reviews that the directive requires project leads to disclose as part of the notice requirement (see Appendix C of the directive) (emphasis added)

Based on Section 1 of the DADM, with the 25 April 2024 date coming, we should see more peer reviews posted for past AIAs.

This directive applies to all automated decision systems developed or procured after . However,

- 1.2.1 existing systems developed or procured prior to will have until to fully transition to the requirements in subsections 6.2.3, 6.3.1, 6.3.4, 6.3.5 and 6.3.6 in this directive;

- 1.2.2 new systems developed or procured after will have until to meet the requirements in this directive. (emphasis added)

The impetus behind the grace period was set out in the proposed amendment summary at page 8:

TBS recognizes the challenge of adapting to new policy requirements while planning or executing projects that would be subject to them. In response, a 6-month ‘grace period’ is proposed to provide departments with time to plan for compliance with the amended directive. For systems that are already in place on the release date, TBS proposes granting departments a full year to comply with new requirements in the directive. Introducing this period would enable departments to plan for the integration of new measures into existing automation systems. This could involve publishing previously completed peer reviews or implementing new data governance measures for input and output data.

During this period, these systems would continue to be subject to the current requirements of the directive. (emphasis added)

The new DADM section states:

Peer review

- 6.3.5 Consulting the appropriate qualified experts to review the automated decision system and publishing the complete review or a plain language summary of the findings prior to the system’s production, as prescribed in Appendix C. (emphasis added)

Under Appendix C, for Level 2 – Moderate Impact projects (the level at which all eight of IRCC’s AIA projects are self-classified), the requirement is as follows:

Consult at least one of the following experts and publish the complete review or a plain language summary of the findings on a Government of Canada website:

- Qualified expert from a federal, provincial, territorial or municipal government institution

- Qualified members of faculty of a post-secondary institution

- Qualified researchers from a relevant non-governmental organization

- Contracted third-party vendor with a relevant specialization

- A data and automation advisory board specified by Treasury Board of Canada Secretariat

OR:

Publish specifications of the automated decision system in a peer-reviewed journal. Where access to the published review is restricted, ensure that a plain language summary of the findings is openly available.

We should then expect movement in the next two weeks.

As I wrote about here, IRCC has posted one of their Peer Reviews, this one for the International Experience Canada Work Permit Eligibility Model. I will analyze it (alongside other peer reviews) in a future blog and explain why I think it is important for the questions it raises about automation bias.

In light of the above, I am sharing three Peer Reviews for IRCC AIAs. These may or may not be the final ones that IRCC eventually posts, presumably before 25 April 2024.

I have posted each document below the name of its corresponding AIA. Please note that the PDF viewer does not work on mobile devices. As such, I have also added a link to a shared Google Doc for your viewing/downloading ease.

(1) Spouse Or Common-Law in Canada Advanced Analytics [Link]

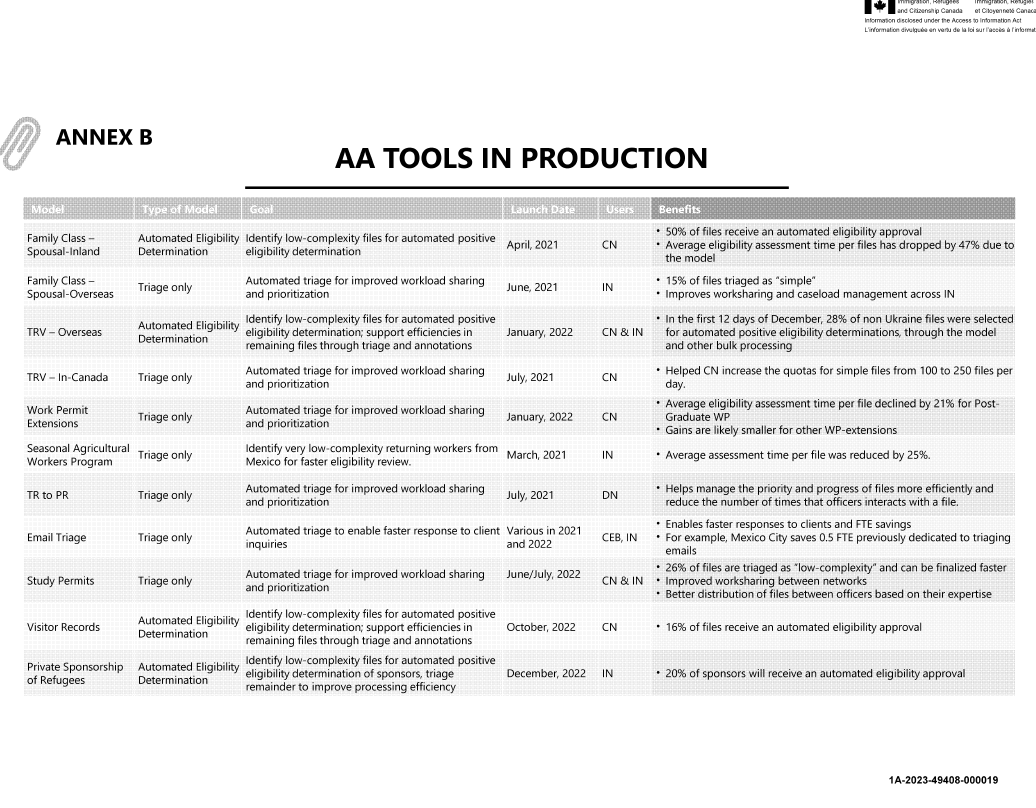

A-2022-00374_ – Stats Can peer review on Spousal AI Model

Note: We know that IRCC has also been utilizing AA for Family Class Spousal-Overseas, but as this is a ‘triage only’ model, it appears IRCC has not published a separate AIA for this.

(2) Advanced Analytics Triage for Overseas Temporary Resident Visa Applications [Link]

NRC Data Centre – 2018 Peer Review from A-2022-70246

Note: There is a good chance this was a preliminary peer review that predates the current model. The core of the analysis is also in French (which I will break down, again, in a future blog).

(3) Integrity Trends Analysis Tool (previously known as Watchtower) [Link]

Pages from A-2022-70246 – Peer Review – AA – Watchtower Peer Review

Note: The ITAT was formerly known as Watchtower, and also Lighthouse. This project has undergone some massive changes in response to peer review and other feedback, so I am not sure if there was a more recent peer review before the ITAT was officially published.

I will share my opinions on these peer reviews in future writing, but I wanted to first put them out there, as their contents will be relevant to work and presentations I am doing over the coming months. Hopefully, IRCC itself publishes the documents so that scholars can engage in dialogue on them.

My one takeaway/recommendation in this context is that we should follow what Dillon Reisman, Jason Schultz, Kate Crawford, and Meredith Whittaker suggest in their 2018 report, “ALGORITHMIC IMPACT ASSESSMENTS: A PRACTICAL FRAMEWORK FOR PUBLIC AGENCY ACCOUNTABILITY”, and allow for meaningful access and a comment period.

If the idea is truly to build public trust, my ideal process flow sees an entity (say IRCC) publish a draft AIA with peer review and GBA+ report and allow the public (external stakeholders/experts/the Bar etc.) to provide comments BEFORE the system production starts. I have reviewed several emails between TBS and IRCC, for example, and I am not convinced of the vetting process for these projects. Many of the questions that need to be asked require interdisciplinary subject expertise (particularly in immigration law and policy) that I do not see in the AIA approval process nor in the peer reviews.

What are your thoughts? I will break down the peer reviews in a blog post to come.